Old, but I'm not that old. Young, but I'm not that bold. And I don't think the world is sold. I'm just doing what we're told. (Counting Stars - OneRepublic)

Wednesday, December 10, 2014

AsciidoctorJ 1.5.2 released

Labels: asciidoctor, asciidoctorj

Arquillian + Java 8

Creating custom Assets

ShrinkWrap comes with some predefined assets (Url, String, Byte, Class, ...), but sometimes you may need to implement your own Asset.

A common way to do it is with an anonymous inner class. However, Asset can be considered a functional interface because it has only one abstract method, so we can use a lambda and avoid the inner class.

Much simpler and more readable.
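The following hedged sketch shows the difference. It defines a local stand-in for ShrinkWrap's Asset interface (org.jboss.shrinkwrap.api.asset.Asset, whose single abstract method is InputStream openStream()), so it compiles without ShrinkWrap on the classpath. The LambdaAssetDemo class and its helper methods are hypothetical. Only the shape of the interface mirrors ShrinkWrap.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Local stand-in for ShrinkWrap's org.jboss.shrinkwrap.api.asset.Asset,
// which declares a single abstract method: InputStream openStream().
interface Asset {
    InputStream openStream();
}

class LambdaAssetDemo {

    // Pre-Java 8 style: an anonymous inner class.
    static Asset innerClassAsset(final String content) {
        return new Asset() {
            @Override
            public InputStream openStream() {
                return new ByteArrayInputStream(content.getBytes(StandardCharsets.UTF_8));
            }
        };
    }

    // Java 8 style: the same asset as a lambda, possible because Asset has one abstract method.
    static Asset lambdaAsset(String content) {
        return () -> new ByteArrayInputStream(content.getBytes(StandardCharsets.UTF_8));
    }

    // Demo helper: reads an asset's stream fully back into a String.
    static String read(Asset asset) {
        try (InputStream in = asset.openStream();
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            byte[] buffer = new byte[1024];
            int n;
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
            }
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(read(innerClassAsset("Hello from an inner class")));
        System.out.println(read(lambdaAsset("Hello from a lambda")));
    }
}
```

With the real ShrinkWrap interface, the lambda can be passed anywhere an Asset is expected, for instance when adding a resource to an archive.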

Parsing HTML tables

Prior to Java 8, your code would look something like this:

But in Java 8, with the addition of the Streams API, the code becomes much simpler and more readable:
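Here is a minimal sketch of both styles. It assumes each table row has already been extracted as a list of cell strings, with the header row first. In a real Arquillian Drone test, the cells would typically come from WebDriver WebElement lookups. The TableParsingDemo and Item names, the two-column layout and the sample data are all hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class TableParsingDemo {

    // Hypothetical row model: first cell is a name, second a numeric quantity.
    static final class Item {
        final String name;
        final int quantity;

        Item(String name, int quantity) {
            this.name = name;
            this.quantity = quantity;
        }
    }

    // Maps one row of cell texts to an Item, trimming surrounding whitespace.
    static Item toItem(List<String> cells) {
        return new Item(cells.get(0).trim(), Integer.parseInt(cells.get(1).trim()));
    }

    // Pre-Java 8 style: index-based loop that skips the header row.
    static List<Item> parseWithLoop(List<List<String>> rows) {
        List<Item> items = new ArrayList<>();
        for (int i = 1; i < rows.size(); i++) { // row 0 is the header
            items.add(toItem(rows.get(i)));
        }
        return items;
    }

    // Java 8 style: the same transformation as a stream pipeline with a method reference.
    static List<Item> parseWithStream(List<List<String>> rows) {
        return rows.stream()
                .skip(1)                        // skip the header row
                .map(TableParsingDemo::toItem)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<List<String>> rows = Arrays.asList(
                Arrays.asList("Name", "Quantity"),
                Arrays.asList("apples", " 3"),
                Arrays.asList("pears", "5"));
        parseWithStream(rows).forEach(item -> System.out.println(item.name + " = " + item.quantity));
    }
}
```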

So, as you can see, Java 8 features can be used not only in business code but also in tests.

We keep learning,

Alex.

See yourself, You are the steps you take, You and you, and that's the only way (Owner Of A Lonely Heart - Yes)

Music: https://www.youtube.com/watch?v=lsx3nGoKIN8

Labels: arquillian, java 8, lambda, method references

Tuesday, December 09, 2014

RESTful Java Patterns and Best Practices Book Review

Labels: book review, packt, restful web services

Sunday, November 30, 2014

Arquillian Cube. Let's zap ALL these bugs, even the infrastructure ones.

- Because Docker is like a cube.

- Because the Borg starship is called a Cube and, well, because we are moving tests closer to production, we can say that "any resistance is futile, bugs will be assimilated".

DOCKER_OPTS="-H tcp://127.0.0.1:2375 -H unix:///var/run/docker.sock"

$ docker -H tcp://127.0.0.1:2375 version
Client version: 0.8.0
Go version (client): go1.2
Git commit (client): cc3a8c8
Server version: 1.2.0
Git commit (server): fa7b24f
Go version (server): go1.3.1

And the test:

And finally, arquillian.xml is configured:

(1) Arquillian Cube extension is registered.

(2) Docker server version is required.

(3) The Docker server URI is required. If you are using a remote Docker host or Boot2Docker, you need to set the remote host IP here. In this case, the Docker server is on the same machine.

(4) A Docker container has many configurable parameters. To avoid creating one XML property for each of them, YAML content can be embedded directly as a property.

(5) Configuration of the Tomcat remote adapter. Cube starts the Docker container when it runs in the same context as an Arquillian container with the same name.

(6) The host can be localhost because ports are forwarded between the container and the Docker server.

(7) The port is exposed as well.

(8) A user and password are required to deploy the WAR file to the remote Tomcat.

Come on now, who do you, Who do you, who do you, who do you think you are?, Ha ha ha, bless your soul, You really think you're in control? (Crazy - Gnarls Barkley)

Music: https://www.youtube.com/watch?v=bd2B6SjMh_w

Labels: Apache Tomcat, arquillian, arquillian cube, Docker, integration tests

Tuesday, September 23, 2014

Java EE + MongoDb with Apache TomEE and Jongo Starter Project

Do you know MongoDB and Java EE, but not exactly how to integrate them? Have you read a lot about the topic without finding a solution that fits? This starter project is for you:

- Jongo as the MongoDB mapper (www.jongo.org).

- Apache TomEE as the application server and for integration (tomee.apache.org).

- Arquillian for tests (www.arquillian.org).

If you are going to use another application server, you can replace this approach with the one it provides, or use DeltaSpike extensions or your own method. Also, because the MongoClient database is obtained from a method annotated with @Produces, you can inject it wherever you want in your code. This means you can skip the abstract services layer if you like.

You can clone the project from https://github.com/lordofthejars/tomee-mongodb-starter-project

We keep learning,

Alex.

I'm driving down to the barrio, Going 15 miles an hour cause I'm already stoned, Give the guy a twenty and wait in the car, He tosses me a baggie then he runs real far (Mota - The Offspring)

Music: https://www.youtube.com/watch?v=xS5_TO8lyF8

Labels: Apache TomEE, arquillian, CDI, CRUD, java ee, mongodb, spring data

Thursday, September 18, 2014

Apache TomEE + ShrinkWrap == JavaEE Boot. (Not yet a Spring Boot killer, but on the way)

Do you believe in life after love, I can feel something inside me say, I really don't think you're strong enough, Now (Believe - Cher)

Labels: Apache TomEE, arquillian, embedded, java ee, shrinkwrap, spring boot

Thursday, September 11, 2014

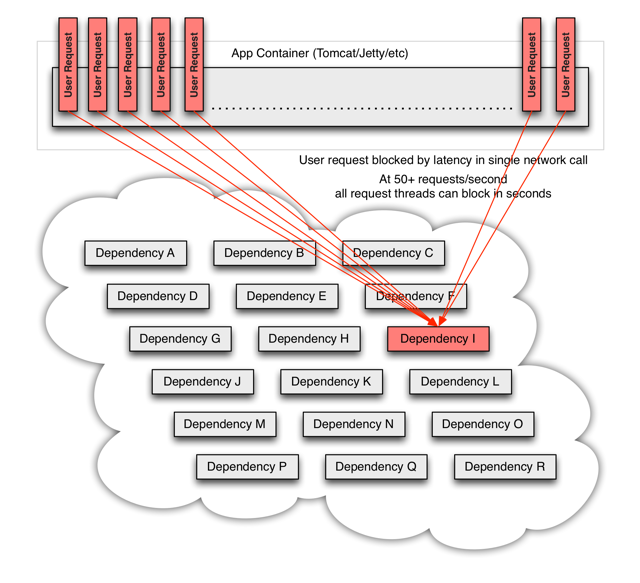

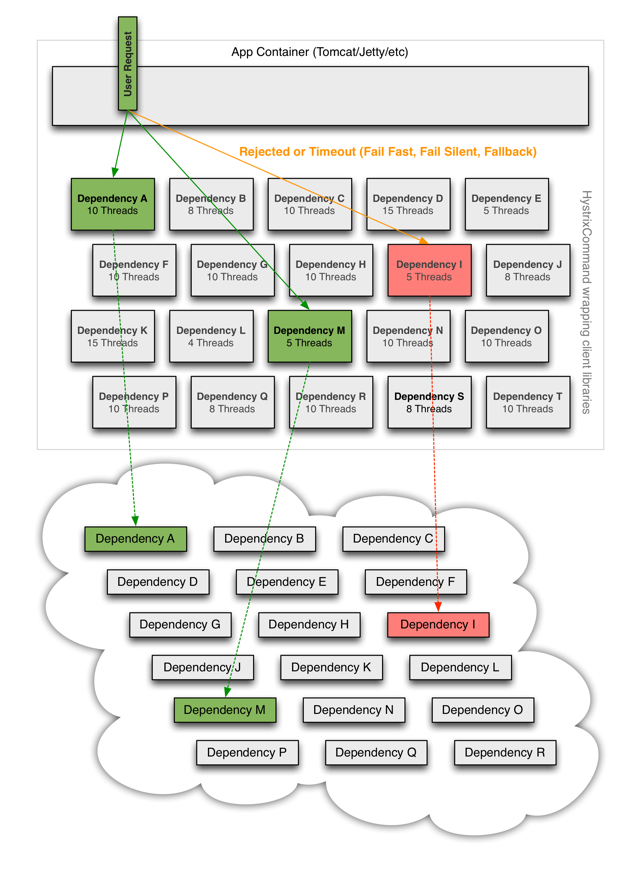

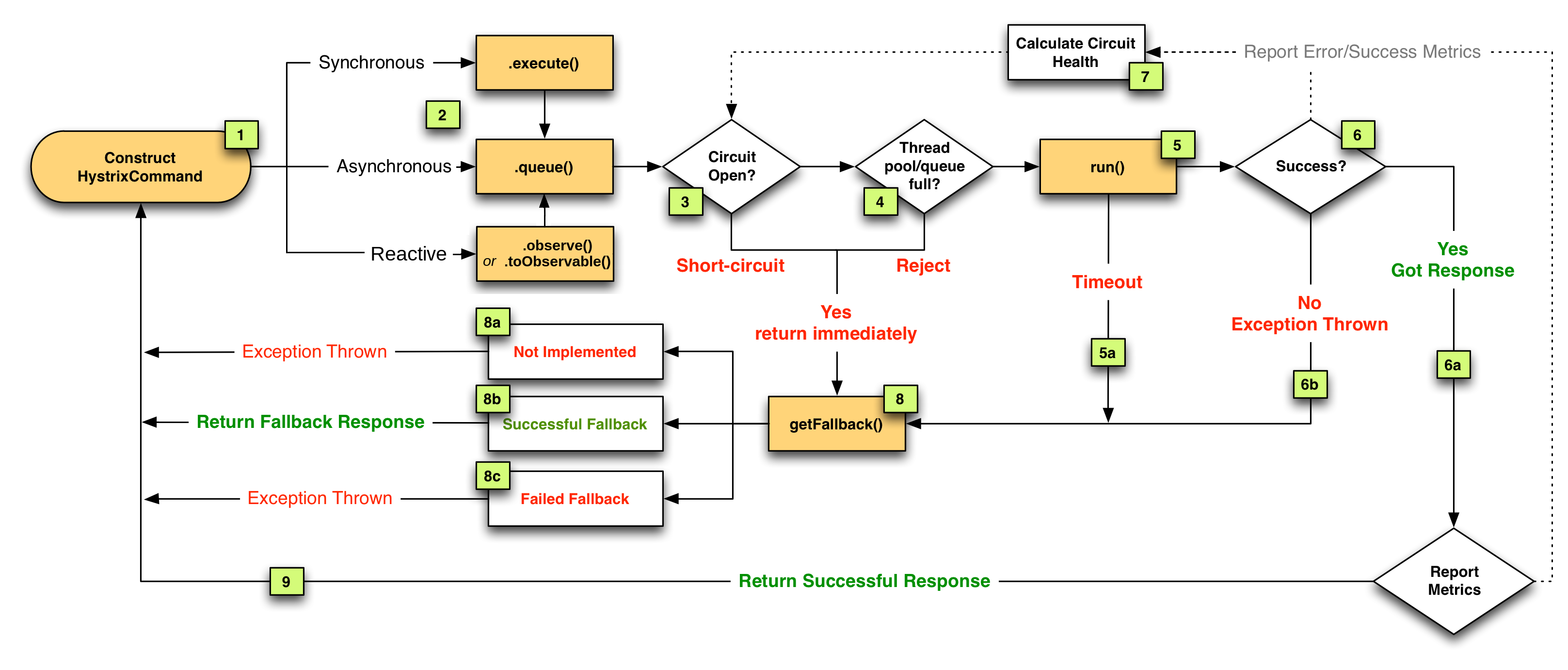

Defend your Application with Hystrix

- Each thread has a timeout, so a call cannot wait indefinitely for a response.

- Performs fallbacks wherever feasible to protect users from failure.

- Measures successes, failures (exceptions thrown by the client), timeouts and thread rejections, and allows monitoring.

- Implements the circuit-breaker pattern, which stops all requests to an external service for a period of time, automatically or manually, if the error percentage passes a threshold.

- A method to do something in case of a service failure. This method may return an empty, default or stubbed value. It could also, for example, invoke another service that can perform the same logic as the failing one.

- Some kind of logic to open and close the circuit automatically.
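To make these two ingredients concrete, here is a hand-rolled sketch of the circuit-breaker pattern with a fallback method. It is not the Hystrix API: Hystrix wraps calls in HystrixCommand subclasses and keeps rolling statistics. The SimpleCircuitBreaker class, its thresholds and the injectable clock are assumptions for illustration. Error counts here are cumulative while the circuit is closed, and the code is not thread-safe.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Minimal, single-threaded circuit breaker with fallback (a sketch, NOT the Hystrix API).
class SimpleCircuitBreaker {
    private final int minimumCalls;             // calls needed before the error rate is evaluated
    private final double errorThresholdPercent; // open when failures / calls >= this percentage
    private final long openMillis;              // how long the circuit stays open
    private final LongSupplier clock;           // injectable time source in milliseconds

    private int calls;
    private int failures;
    private long openedAt = -1;                 // -1 means the circuit is closed

    SimpleCircuitBreaker(int minimumCalls, double errorThresholdPercent,
                         long openMillis, LongSupplier clock) {
        this.minimumCalls = minimumCalls;
        this.errorThresholdPercent = errorThresholdPercent;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    <T> T call(Supplier<T> primary, Supplier<T> fallback) {
        boolean trial = false;
        if (openedAt >= 0) {
            if (clock.getAsLong() - openedAt < openMillis) {
                return fallback.get();          // open: do not touch the failing service
            }
            trial = true;                       // window elapsed: allow one trial call (half-open)
        }
        try {
            T result = primary.get();
            if (trial) {
                reset();                        // trial succeeded: close the circuit
            } else {
                calls++;
            }
            return result;
        } catch (RuntimeException e) {
            if (trial) {
                openedAt = clock.getAsLong();   // trial failed: open again
            } else {
                calls++;
                failures++;
                if (calls >= minimumCalls && failures * 100.0 / calls >= errorThresholdPercent) {
                    openedAt = clock.getAsLong();
                }
            }
            return fallback.get();              // failure: degrade gracefully
        }
    }

    boolean isOpen() {
        return openedAt >= 0 && clock.getAsLong() - openedAt < openMillis;
    }

    private void reset() {
        calls = 0;
        failures = 0;
        openedAt = -1;
    }

    public static void main(String[] args) {
        SimpleCircuitBreaker breaker =
                new SimpleCircuitBreaker(2, 50.0, 5000, System::currentTimeMillis);
        Supplier<String> remote = () -> { throw new IllegalStateException("service down"); };
        for (int i = 0; i < 3; i++) {
            System.out.println(breaker.call(remote, () -> "cached value") + " open=" + breaker.isOpen());
        }
    }
}
```

Injecting the clock as a LongSupplier keeps the closed, open and half-open transitions testable without sleeping.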

Sing us a song, you're the piano man, Sing us a song tonight, Well, we're all in the mood for a melody, And you've got us feelin' alright (Piano Man - Billy Joel)

Labels: arquillian, distributed systems, fault tolerance, hystrix, java, latency

Tuesday, July 08, 2014

RxJava + Java8 + Java EE 7 + Arquillian = Bliss

- One service to get book details, which could be retrieved from any legacy system such as an RDBMS.

- One service to get all comments written about a book. In this case, that information could be stored in a document-based database.

It is also required to call the onCompleted method when all the logic is done.

Notice that we want the observable to be asynchronous, so apart from creating a Runnable, we are using an Executor to run the logic in a separate thread. One of the great additions in Java EE 7 is a managed way to create threads inside a container. In this case, we are using the ManagedExecutorService provided by the container to spawn a task asynchronously in a thread different from the current one.

Then we need to create an observable in charge of zipping both responses once both are available. This is done with the zip method of the Observable class, which receives two Observables and applies a function to combine their results. In this case, that function is a lambda expression that creates a new JSON object appending both responses.
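RxJava's Observable.zip is not reproduced here. As a swapped-in, JDK-only analogue, CompletableFuture.thenCombine waits for two asynchronous results and merges them with a function, much like zip does. A plain ExecutorService stands in for the container's ManagedExecutorService, which is itself an ExecutorService. The two fetch methods and their JSON strings are hypothetical placeholders for the book-details and comments services.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class ZipResponsesDemo {

    // Hypothetical placeholders for the remote book-details and comments services.
    static String fetchBookDetails(String bookId) {
        return "{\"id\":\"" + bookId + "\",\"title\":\"Some title\"}";
    }

    static String fetchComments(String bookId) {
        return "[\"Great book\"]";
    }

    // Runs both calls asynchronously on the given executor and, once BOTH are
    // available, combines them into one JSON string (similar in spirit to Observable.zip).
    static CompletableFuture<String> bookWithComments(String bookId, ExecutorService executor) {
        CompletableFuture<String> details =
                CompletableFuture.supplyAsync(() -> fetchBookDetails(bookId), executor);
        CompletableFuture<String> comments =
                CompletableFuture.supplyAsync(() -> fetchComments(bookId), executor);
        return details.thenCombine(comments,
                (d, c) -> "{\"book\":" + d + ",\"comments\":" + c + "}");
    }

    public static void main(String[] args) {
        ExecutorService executor = Executors.newFixedThreadPool(2);
        try {
            System.out.println(bookWithComments("1", executor).join());
        } finally {
            executor.shutdown();
        }
    }
}
```

In a JAX-RS 2.0 asynchronous endpoint, the combined future's completion would be the natural place to resume the suspended response.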

Let's take a look at the previous service. We are using one of the new additions in Java EE 7: JAX-RS 2.0 asynchronous REST endpoints, via the @Suspended annotation. Basically, we free server resources and generate the response when it is available using the resume method.

And finally, a test. We are using WildFly 8.1 as the Java EE 7 server, together with Arquillian. Because each service may be deployed on a different server, we are going to deploy each service in a different WAR, but inside the same server.

So in this case we are going to deploy three WAR files, which is very easy to do in Arquillian.

Furthermore, we have seen how to use some of the new additions of Java EE 7, such as developing an asynchronous RESTful service with JAX-RS.

In this post we have learnt how to deal with the interconnection between services and how to make them scalable and less resource-consuming. But we have not talked about what happens when one of these services fails. What happens to the callers? Do we have a way to manage it? Is there a way to avoid spending resources when one of the services is not available? We will cover this in the next post, about fault tolerance.

We keep learning,

Alex.

Good morning, good morning! Good morning in the morning! Let's chase away laziness and jump quickly out of bed. (Bon Dia! - Dàmaris Gelabert)

Music: https://www.youtube.com/watch?v=BF7w-xJUlwM

Labels: arquillian, java ee7, java8, reactive extensions, rxjava

Monday, June 09, 2014

Injecting properties file values in CDI using DeltaSpike and Apache TomEE

Longing for the sky, she ran across the sky; that girl's life is a vapor trail (The Wind Rises - Joe Hisaishi, Yumi Arai)

Labels: Apache TomEE, CDI, DeltaSpike, java ee, properties file

Monday, May 19, 2014

Java Cookbook Review

Testing Polyglot Persistence Done Right [slides]

And here you can see the slides (http://www.slideshare.net/asotobu/testing-polyglot-persistence-done-right). If you have any questions, don't hesitate to contact us.

Alex.

I get lost in maps. I sail through their pages. Now the wind blows, when the sea was left far behind long ago. (Pájaros de Barro - Manolo García)

Music: https://www.youtube.com/watch?v=9zdEXRKJSNY

Labels: arquillian, arquillian persistence extension, geecon, nosql, nosqlunit, persistence layer tests, sql, test

Monday, April 21, 2014

JPA Tip: Inject EntityManager instead of using PersistenceContext

Almost every project you develop with Java EE will contain a persistence layer, and you will probably use JPA to deal with SQL systems. One way to get an EntityManager is to use @PersistenceContext. In this post we are going to explore the advantages of injecting the EntityManager instead of using the @PersistenceContext annotation.

We keep reading,

Alex

Pistol shots ring out in the bar-room night, Enter Patty Valentine from the upper hall, She sees a bartender in a pool of blood, Cries out, "My God, they killed them all" (Hurricane - Bob Dylan)

Music: https://www.youtube.com/watch?v=hr8Wn1Mwwwk

Labels: Apache TomEE, arquillian, CDI, java persistence, jpa

Monday, April 14, 2014

Docstract-0.2.0 Released.

- If the include contains a Java file, like the first example, the whole class is read and inserted within an AsciiDoc source code block.

- If the include contains a Java file with a # symbol, like the second one, the part to the right of # is used as a method name of the given class. For example, Project#setId(String, String) will include the method of the Project class called setId that receives two String parameters.

- If the include contains an XML file, the file is inserted "as is" within an AsciiDoc source code block.

- If the include contains an XML file and there is an XPath expression between brackets, the result of that expression is inserted.

- Any other include is left "as is", so it will be processed by the AsciiDoc(tor) processor.
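The rules above can be sketched as a small classifier. This is a hypothetical illustration, not Docstract's code. It assumes the standard AsciiDoc include::<target>[<attributes>] macro syntax, with the XPath expression as the bracket content. The IncludeClassifier and Kind names are invented for the example.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch of the include rules above; not Docstract's implementation.
class IncludeClassifier {

    enum Kind { JAVA_CLASS, JAVA_METHOD, XML_FILE, XML_XPATH, PASS_THROUGH }

    // include::<target>[<attributes>]; the bracket content may itself contain brackets.
    private static final Pattern INCLUDE = Pattern.compile("^include::([^\\[]+)\\[(.*)\\]$");

    static Kind classify(String line) {
        Matcher m = INCLUDE.matcher(line.trim());
        if (!m.matches()) {
            return Kind.PASS_THROUGH;               // not an include macro
        }
        String target = m.group(1);
        String brackets = m.group(2).trim();
        int hash = target.indexOf('#');
        if (hash >= 0 && target.substring(0, hash).endsWith(".java")) {
            return Kind.JAVA_METHOD;                // e.g. Project.java#setId(String, String)
        }
        if (target.endsWith(".java")) {
            return Kind.JAVA_CLASS;                 // whole class
        }
        if (target.endsWith(".xml")) {
            return brackets.isEmpty() ? Kind.XML_FILE : Kind.XML_XPATH;
        }
        return Kind.PASS_THROUGH;                   // left for Asciidoctor itself
    }

    // Returns the method part after '#', e.g. "setId(String, String)", or "" if absent.
    static String methodPart(String target) {
        int hash = target.indexOf('#');
        return hash < 0 ? "" : target.substring(hash + 1);
    }

    public static void main(String[] args) {
        String[] lines = {
            "include::src/main/java/com/example/Project.java[]",
            "include::src/main/java/com/example/Project.java#setId(String, String)[]",
            "include::pom.xml[]",
            "include::pom.xml[/project/version]",
            "include::chapter1.adoc[]"
        };
        for (String line : lines) {
            System.out.println(classify(line) + " <- " + line);
        }
    }
}
```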

Also note that include files are resolved relative to the directory where the CLI is run, typically the root of the project.

You can use callouts inside Java and XML code, and the processor will render them properly.

In Java you can write a callout as a single-line comment that starts with the callout number between < and >.

To render the previous class, we can call:

java -jar docstract-<version>.jar --input=src/test/java/com/lordofthejars/asciidoctorfy/MM.java --output=README.adoc
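The Java callout convention described above is a single-line comment that begins with <n>. Detecting it comes down to a regular expression. This is a minimal sketch, not Docstract's implementation. CalloutFinder and Callout are hypothetical names, and the scan does not skip // sequences inside string literals.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch: finds single-line comments that begin with a callout number, e.g. "// <1> text".
class CalloutFinder {

    static final class Callout {
        final int lineIndex; // 0-based line in the source
        final int number;    // the n in <n>
        final String text;   // remaining comment text, possibly empty

        Callout(int lineIndex, int number, String text) {
            this.lineIndex = lineIndex;
            this.number = number;
            this.text = text;
        }
    }

    private static final Pattern CALLOUT = Pattern.compile("//\\s*<(\\d+)>\\s*(.*)$");

    static List<Callout> find(List<String> sourceLines) {
        List<Callout> callouts = new ArrayList<>();
        for (int i = 0; i < sourceLines.size(); i++) {
            Matcher m = CALLOUT.matcher(sourceLines.get(i));
            if (m.find()) {
                callouts.add(new Callout(i, Integer.parseInt(m.group(1)), m.group(2).trim()));
            }
        }
        return callouts;
    }

    public static void main(String[] args) {
        List<String> source = Arrays.asList(
                "public class Project {",
                "    private String id; // <1> the identifier",
                "}");
        for (Callout c : find(source)) {
            System.out.println("line " + c.lineIndex + ": <" + c.number + "> " + c.text);
        }
    }
}
```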

Alex.

I know how to lure them, and I know my way around the pipes. So I can be happy and merry, for all the birds are mine. (Die Zauberflöte - Wolfgang Amadeus Mozart)

Music: https://www.youtube.com/watch?v=PRtvVQ1fq8s

Labels: Apache TomEE, asciidoc, asciidoctor, documentation, tomitribe

Wednesday, March 26, 2014

Apache TomEE + NoSQL (writing your own resources)

We keep learning,

Alex.

And I have her hand!, Happy day!, Here I am, Soldier and husband! (Ah mes amis - Gaetano Donizetti)

Music: https://www.youtube.com/watch?v=3aS6M8j3pvQ

Labels: Apache TomEE, nosql, patterns, resources

Tuesday, February 18, 2014

Learn CDI with Apache TomEE [Video tutorials]

Servlets

CDI (Inject)

CDI (Scopes)

CDI (Name Qualifiers)

CDI (Qualifiers)

CDI (Alternatives)

CDI (Postconstruct)

CDI (Producer)

CDI (Events)

CDI (Interceptors)

CDI (Decorators)

We keep learning,

What are you going to do without me, When you find out that I'm a hit, You don't play games with the one who knows, And if you do, play carefully (El Cuchi Cuchi - Mayimbe)

Labels: Apache TomEE, CDI, Context Dependency Injection, java ee, Java EE 6, JavaEE

Monday, February 17, 2014

Aliens have invaded Undertow

What is Undertow?

Writing tests for Undertow

- Embedded Servlet Deployment

- Non-blocking handler

Maven Artifacts

Embedded Servlet Deployment

Non-blocking handler

Configuration

So now you can write tests for the Undertow container within the context of Arquillian.

We keep learning,

Alex.

Oh django! After the showers is the sun. Will be shining... (Django - Luis Bacalov & Rocky Roberts)

Music: https://www.youtube.com/watch?v=UX3h22aABIc

Labels: arquillian, integration tests, undertow, unit testing

Monday, January 27, 2014

DRY with examples in documentation: Include Java Extension for Asciidoctor.

Using tags:

The attributes you can set in include are:

- imports: adds the imports.

- fields: adds the fields of the main class.

- class: adds all the content of the main class.

- enum=<classname>: adds the enum with the given name. The enum should be defined inside the class we are adding.

- annotation=<classname>: same as enum but for annotations.

- class=<classname>: same as enum but for inner classes.

- constructor=<constructorName>(<parameterType1>,<parameterType2>, ....): adds the defined constructor. In this case we must set the constructor name and the type of each parameter. For example: MyClass(String).

- method=<returnType> <methodName>(<parameterType1>, <parameterType2>, ...): same as constructor but for methods, which means the return type must be added too. Note that you do not need to add any modifiers or throws clauses.
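To illustrate what a method= value carries, here is a hypothetical parser for the <returnType> <methodName>(<parameterType1>, ...) format. It is not the extension's actual code. It assumes the return type contains no spaces and that parameter types contain no commas, so generic types such as Map<String, Integer> are not handled.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical parser for a "method=" value such as "void setId(String, String)".
class MethodAttributeParser {

    static final class MethodSignature {
        final String returnType;
        final String name;
        final List<String> parameterTypes;

        MethodSignature(String returnType, String name, List<String> parameterTypes) {
            this.returnType = returnType;
            this.name = name;
            this.parameterTypes = Collections.unmodifiableList(parameterTypes);
        }

        @Override
        public String toString() {
            return returnType + " " + name + parameterTypes;
        }
    }

    // group 1: return type (no spaces), group 2: method name, group 3: raw parameter list
    private static final Pattern METHOD =
            Pattern.compile("^\\s*(\\S+)\\s+(\\w+)\\s*\\((.*)\\)\\s*$");

    static MethodSignature parse(String value) {
        Matcher m = METHOD.matcher(value);
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a method signature: " + value);
        }
        List<String> types = new ArrayList<>();
        String params = m.group(3).trim();
        if (!params.isEmpty()) {
            for (String type : params.split(",")) {
                types.add(type.trim()); // assumes no commas inside a single type
            }
        }
        return new MethodSignature(m.group(1), m.group(2), types);
    }

    public static void main(String[] args) {
        System.out.println(parse("void setId(String, String)"));
        System.out.println(parse("java.util.List<String> findNames(String, int)"));
    }
}
```

Modifiers and throws clauses are deliberately absent from the format, so matching on return type, name and parameter types is enough to pick one overload.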

Now we can use our classes within our documentation by adding the required block.

This version of the plugin works with Java 1.7 and earlier. It does not officially support Java 1.8, although it may work in some cases.

The extension is published on Bintray, so to install it you simply have to add the Bintray repository and the required dependency:

The project is hosted at: https://github.com/lordofthejars/asciidoctorj-extensions

We keep learning,

Alex.

I feel shouting ya-hoo, And me still feeling hungry, Cowabunga!!, Cookie monster went and ate the new red two. (Monster Went and Ate My Red 2 - Elvis Costello & Elmo)

Music: https://www.youtube.com/watch?v=KxardpBReQc

Labels: asciidoc, asciidoctor, documentation, java 1.7