When you use JSON Web Tokens (JWT), or any other token technology that requires signing or encrypting payload information, it is important to set an expiration date on the token. When a token has expired, you can either treat it as a possible security breach and refuse any communication that uses it, or you can decide to renew it by issuing it again with a new expiry date.

It is also important to use some kind of secret rotation algorithm, so that the secret used to sign or encrypt tokens is updated periodically. If a secret is compromised, fewer tokens are exposed by it, and you also decrease the probability of a secret being broken while it is still in use.

There are several strategies for implementing this, but in this post I am going to explain how I implemented secret rotation in a project I developed some years ago to sign JWT tokens with the HMAC algorithm.

I am going to show how to create a JWT token in Java.

Notice that what you need to do here is create an algorithm object configured with the HMAC algorithm and a secret, which is then used both to sign tokens and to verify them.
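The idea of an HMAC algorithm object built from a fixed secret can be sketched with the JDK alone. This is only an illustration, not the code from the original project: the class `HmacJwtSketch` and its methods are hypothetical names, the JDK's `Mac` stands in for a JWT library's algorithm object, and the JSON is written by hand instead of using a JSON library.

```java
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Base64;

// Hypothetical helper for illustration; not part of any JWT library.
class HmacJwtSketch {

    private static final Base64.Encoder URL_ENCODER = Base64.getUrlEncoder().withoutPadding();

    // Builds the "algorithm object": an HMAC-SHA256 instance keyed with the secret.
    static Mac hmac256(String secret) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(secret.getBytes(StandardCharsets.UTF_8), "HmacSHA256"));
            return mac;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 is not available", e);
        }
    }

    // Produces header.payload.signature, each part base64url-encoded without padding.
    static String sign(String payloadJson, Mac algorithm) {
        String header = "{\"alg\":\"HS256\",\"typ\":\"JWT\"}";
        String signingInput = encode(header) + "." + encode(payloadJson);
        byte[] signature = algorithm.doFinal(signingInput.getBytes(StandardCharsets.UTF_8));
        return signingInput + "." + URL_ENCODER.encodeToString(signature);
    }

    // Recomputes the signature over header.payload and compares it in constant time.
    static boolean verify(String token, Mac algorithm) {
        int lastDot = token.lastIndexOf('.');
        if (lastDot < 0) {
            return false;
        }
        byte[] expected = algorithm.doFinal(
                token.substring(0, lastDot).getBytes(StandardCharsets.UTF_8));
        try {
            byte[] actual = Base64.getUrlDecoder().decode(token.substring(lastDot + 1));
            return MessageDigest.isEqual(expected, actual);
        } catch (IllegalArgumentException e) {
            return false; // the signature part is not valid base64url
        }
    }

    private static String encode(String text) {
        return URL_ENCODER.encodeToString(text.getBytes(StandardCharsets.UTF_8));
    }
}
```

Calling `HmacJwtSketch.sign("{\"iss\":\"example\"}", HmacJwtSketch.hmac256("secret"))` returns a three-part token that only verifies with an algorithm object built from the same secret. This sketch checks the signature only; claims such as the expiry date still have to be checked separately.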

So what we need is to rotate this algorithm instance every X minutes, so that the probability of someone breaking the secret while it is still valid becomes very low.

So how do you rotate secrets? With a really simple algorithm that everyone can understand, even without being a crypto expert: just use time.

To generate the secret you need a string. In the previous example it was the literal string secret, which of course is not very secure. The idea is to compose the secret string from a root (something we called the big bang part) plus a time part that shifts. In summary, the secret is `<bigbang>+<timeInMilliseconds>`.

The big bang part has no mystery: it is just a static part, for example my_super_secret.

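The interesting part is the time part. Suppose you want to renew the secret every second. You only need to take the current time in milliseconds, divide it by 1000 and multiply it by 1000 again, which sets the milliseconds part to 0s. Printing the current time, then the truncated time, and then the truncated time again after a pause of 50 milliseconds gives something like: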

1515091335543

1515091335000

1515091335000

Notice that although 50 milliseconds passed between the second and third print, the time part is exactly the same. It stays the same for the whole second.

Of course, this is an extreme example where the secret changes every second. The idea is that you remove the part of the time you want to ignore and fill it with 0s, which is why you first divide the time and then multiply by the same number.

For example, to rotate the secret every 10 minutes you just need to divide and multiply by 600000.
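The divide-then-multiply trick can be sketched as a small helper. `TimeWindowSketch` and `truncate` are hypothetical names used only for illustration, and the sample timestamp is the one printed earlier in this post. The sketch relies on Java's integer division of `long` values, which discards the remainder.

```java
// Hypothetical helper for illustration.
class TimeWindowSketch {

    // Integer division drops the remainder, so multiplying back by the same
    // period yields the start of the window that contains timeMillis.
    static long truncate(long timeMillis, long periodMillis) {
        return (timeMillis / periodMillis) * periodMillis;
    }

    public static void main(String[] args) {
        long sample = 1515091335543L;

        // Rotation every second: the millisecond part becomes 000.
        System.out.println(truncate(sample, 1_000L));       // 1515091335000
        // 50 ms later we are still in the same second, so the value is unchanged.
        System.out.println(truncate(sample + 50, 1_000L));  // 1515091335000
        // Rotation every 10 minutes.
        System.out.println(truncate(sample, 600_000L));     // 1515091200000
    }
}
```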

There are two problems with this approach. Both can be fixed, although one of them is not really a big issue.

The first one is that, since you are truncating the time, rotations are aligned to the clock and not to the moment the first secret was calculated. If you want to change the secret every minute and the first calculation occurs in the middle of a minute, then for just this initial case the rotation will occur after 30 seconds and not 1 minute. This is not a big problem, and in our project we did nothing to fix it.

The second one is what happens with tokens that were signed just before a secret rotation. They are still valid, and you need to be able to verify them too, not with the new secret but with the previous one.

To fix this, we created a validity window in which the previous secret is also kept. When the system receives a token, it is first verified with the current secret. If that passes, we can do any other checks and work with it. If not, the token is verified with the previous secret. If that passes, the token is recreated and signed with the new secret; if not, the token is invalid and must be refused.
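The validity window described above can be sketched as follows. This is a minimal illustration and not the project's actual code: `RotationWindowSketch`, its `Result` enum and its method names are hypothetical, and the current time is passed in as a parameter so that the behavior is easy to test.

```java
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;

// Hypothetical helper for illustration.
class RotationWindowSketch {

    enum Result { VALID, VALID_NEEDS_RESIGN, INVALID }

    private final String bigBang;
    private final long periodMillis;

    RotationWindowSketch(String bigBang, long periodMillis) {
        this.bigBang = bigBang;
        this.periodMillis = periodMillis;
    }

    // <bigbang>+<time truncated to the rotation period>
    String secretAt(long timeMillis) {
        return bigBang + (timeMillis / periodMillis) * periodMillis;
    }

    byte[] sign(String data, long nowMillis) {
        return hmac(data, secretAt(nowMillis));
    }

    // Tries the current secret first, then the secret of the previous window.
    Result verify(String data, byte[] signature, long nowMillis) {
        if (MessageDigest.isEqual(hmac(data, secretAt(nowMillis)), signature)) {
            return Result.VALID;
        }
        if (MessageDigest.isEqual(hmac(data, secretAt(nowMillis - periodMillis)), signature)) {
            return Result.VALID_NEEDS_RESIGN; // caller re-signs with the current secret
        }
        return Result.INVALID;
    }

    private static byte[] hmac(String data, String secret) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(secret.getBytes(StandardCharsets.UTF_8), "HmacSHA256"));
            return mac.doFinal(data.getBytes(StandardCharsets.UTF_8));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 is not available", e);
        }
    }
}
```

With a one-minute period, a signature created in one window returns `VALID` within that window, `VALID_NEEDS_RESIGN` during the following window, and `INVALID` after that.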

To create the algorithm object for JWT you only need to do something like:
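A minimal sketch of that step, assuming the JDK's `Mac` stands in for the JWT library's algorithm object and a one-minute rotation. The names `RotatingAlgorithmSketch`, `secretAt`, `algorithmAt` and `currentAlgorithm` are hypothetical, and the big bang part is hard-coded only to keep the example short.

```java
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;

// Hypothetical helper for illustration.
class RotatingAlgorithmSketch {

    // Example value from the text; in a real service, load it from a secure place.
    static final String BIG_BANG = "my_super_secret";
    static final long ROTATION_MILLIS = 60_000L; // assumed rotation period: 1 minute

    // <bigbang>+<time truncated to the rotation period>
    static String secretAt(long timeMillis) {
        return BIG_BANG + (timeMillis / ROTATION_MILLIS) * ROTATION_MILLIS;
    }

    // HMAC-SHA256 instance keyed with the secret that is valid at timeMillis.
    static Mac algorithmAt(long timeMillis) {
        try {
            byte[] key = secretAt(timeMillis).getBytes(StandardCharsets.UTF_8);
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(key, "HmacSHA256"));
            return mac;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 is not available", e);
        }
    }

    // The algorithm object to use right now.
    static Mac currentAlgorithm() {
        return algorithmAt(System.currentTimeMillis());
    }
}
```

Because the secret is derived on demand from the clock, nothing has to be stored or refreshed between calls.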

What I really like about this solution is:

- It is clean, no need for extra elements on your system.

- No need for triggered threads that are run asynchronously to update the secret.

- It is really performant, because you don't need to access an external system.

- Testing the service is really easy.

- The process of verifying is responsible for rotating the secret.

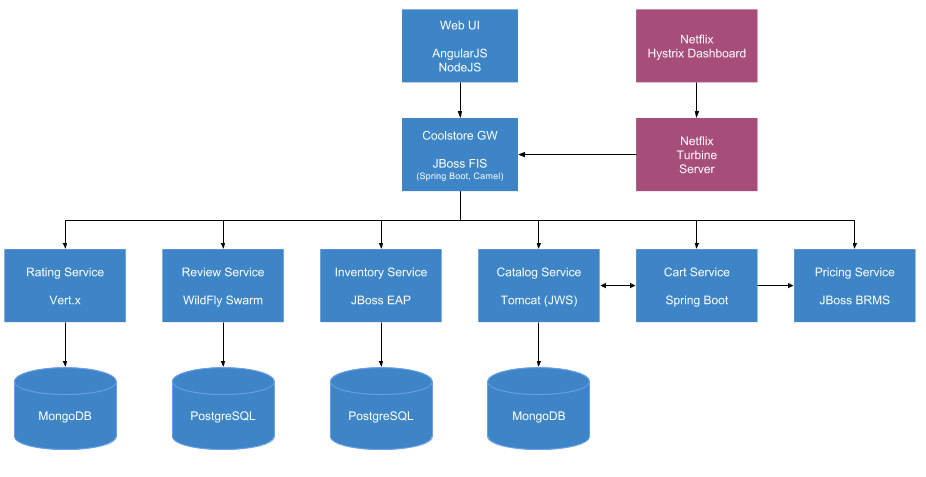

- It is really easy to scale. In fact, you don't need to do anything: you can add more and more instances of the same service, and all of them will rotate the secret at the same time and use the same secret. The rotation process is stateless, so you can scale your instances up or down and every instance will still be able to verify tokens signed by the others.

But of course there are some drawbacks:

- You still need to share a part of the secret (the big bang part) with each of the services in a secure way, maybe using Kubernetes secrets or Vault from HashiCorp. If you are not using microservices, you can just copy a file into a specific location and, when the service is up and running, read the big bang part and then remove the file.

- The clocks of your servers need to be more or less synchronized. Servers in different time zones are not a problem by themselves as long as the time part comes from epoch milliseconds, which are the same everywhere, but clock drift is. Since both the previous and the current secret are accepted, the clocks do not have to agree to the second, and a delay of some seconds is still possible without any issue.
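The file-based option from the first drawback can be sketched like this. It is only an illustration under assumptions: `BigBangLoaderSketch` and `loadAndRemove` are hypothetical names, the file is assumed to contain nothing but the big bang part, and surrounding whitespace is trimmed.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper for illustration.
class BigBangLoaderSketch {

    // Reads the big bang part at startup and deletes the file afterwards,
    // so the root secret no longer sits on disk while the service runs.
    static String loadAndRemove(Path file) {
        try {
            String bigBang = new String(Files.readAllBytes(file), StandardCharsets.UTF_8).trim();
            Files.delete(file);
            return bigBang;
        } catch (IOException e) {
            throw new UncheckedIOException("Could not load the big bang part from " + file, e);
        }
    }
}
```

Deleting the file means the service cannot reload the value by itself after a restart, so the file has to be provisioned again on each deployment.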

So we have seen a really simple way of rotating secrets so you can keep your tokens safer. Of course, there are other ways of doing the same. In this post, I have just explained how I did it in a monolith application we developed three years ago, and it worked really well.

We keep learning,

Alex.

You just want attention, you don't want my heart, Maybe you just hate the thought of me with someone new, Yeah, you just want attention, I knew from the start, You're just making sure I'm never gettin' over you (Attention - Charlie Puth)

Music: https://www.youtube.com/watch?v=nfs8NYg7yQM

Follow me at https://twitter.com/alexsotob
